Having bots crawl your website, disrupt activity, slow users down, and expose your site to attacks can be really annoying. You’re not alone, though. Bots are everywhere on the web: around 40% of global web traffic, almost half, comes from bots. Gross, right?

There are numerous kinds of internet bots that serve different purposes. However, a significant portion of bot traffic comes from bad bots with harmful objectives.

What does this mean?

Bad bot traffic is growing and hurting numerous online businesses and services, so blocking it on your website is now a matter of urgency. Read on to find out what bots are and the best ways you can protect your website from them.

Contents

- What are bots, and how are they bad?

- Are there only bad bots?

- 8 best ways to protect your website from bots

- Take a bold stand against bots and safeguard your website

Moyofade Ipadeola is a Content Strategist, UX Writer and Editor. Witty, she loves personal development and helping people grow. Mo, as she’s fondly called, is fascinated by all things tech.

What are bots, and how are they bad?

A bot, short for robot and also called an internet bot, is an automated computer program designed to perform straightforward, repetitive actions over the internet. Bots often imitate or replace a human user’s behavior. They can run without specific instructions from humans and complete their tasks much faster than a human user ever could.

Are there only bad bots?

Contrary to what many people think, not all bots are bad. Good bots are owned by reputable organizations, such as Google, and help web users find relevant businesses, products, and services. They don’t hide their identities as bots, and they obey the rules of your website’s robots.txt file. Examples of good bots include search engine crawlers and price comparison bots.

However, bad bots are very sneaky. They try to disguise themselves as humans, thereby leading to all sorts of problems. They automate attacks and fraudulent activities. These bots can affect your website performance negatively, damage the experience of legitimate customers, and directly attack your business.

Here are the red flags that point to bad bots and that you should watch for.

- Login attempts from unknown and untraceable sources

- A sudden surge of sign-ups for your newsletter or other forms

- An excessive number of comments on your blog and other related web pages

- Comments that are hard to comprehend, with excessive linking and obvious spam
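The last red flag, excessive linking, is simple to check automatically. The following is a minimal sketch, not a complete spam filter: the function name `looks_like_spam` and the default limit of two links are assumptions made for illustration, and a real site would combine this with other signals.

```python
import re

# Matches the start of an http or https link anywhere in the text.
_LINK = re.compile(r"https?://", re.IGNORECASE)


def looks_like_spam(comment: str, max_links: int = 2) -> bool:
    """Flag a comment that contains more links than max_links (an assumed limit)."""
    return len(_LINK.findall(comment)) > max_links
```

Flagged comments could be held for manual review rather than deleted outright, since legitimate users sometimes post several links too.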

8 best ways to protect your website from bots

Now that you know what bots really are and how to identify them, the next step is learning how to protect your website from the bad ones.

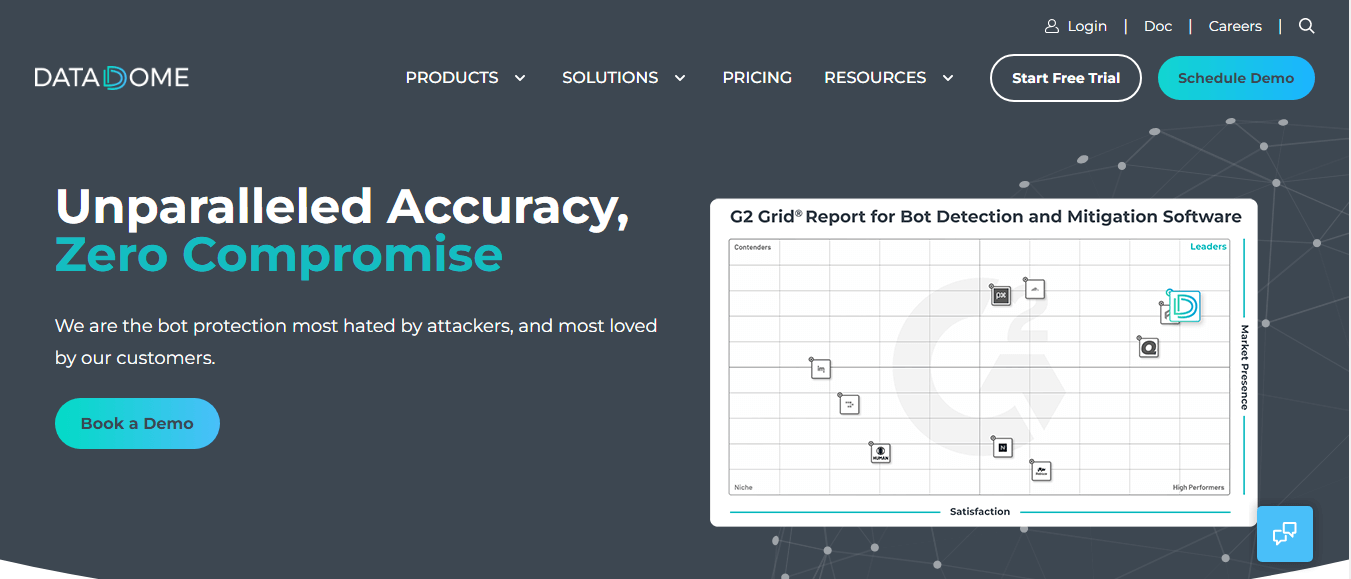

Invest in the right bot detection software

One of the most effective ways to stop bots from ruining your website is to invest in the right bot detection software. Bot detection software uses sophisticated algorithms to recognize the behavior patterns of malicious bots, distinguish them from human users, and block them.

Numerous products are available to stop bots from hoarding inventory, stuffing credentials, exploiting web application vulnerabilities, and launching DDoS attacks. Many of them are easy to install and do most of the work for you.

Use the CAPTCHA method

CAPTCHA, an acronym for Completely Automated Public Turing test to tell Computers and Humans Apart, is a security measure that protects you from spam and automated password guessing. Place a CAPTCHA on your web forms and login pages to prevent credential stuffing, spam content, and fake requests.

Most CAPTCHAs ask the user to perform an easy task, such as identifying a number or object from an image, which is a brilliant strategy to filter out most bots. CAPTCHA uses this strategy to prove that your visitors are human beings, not a computer trying to break into a password-protected account.

Here’s how you can use CAPTCHA strategically.

- Verify every CAPTCHA on the server. If you place a CAPTCHA on your website, make sure you only process requests where the user has provided the correct answer. Some websites display a CAPTCHA in the front end but never check in the back end whether it was answered correctly. This invites manipulation and exposes your website to bots and other malicious crawlers.

- Always remember that primitive CAPTCHAs are very easy to bypass, and third-party solving services can solve them on a bot’s behalf. To guard against this and other cyber attacks, use a more sophisticated CAPTCHA such as Google’s reCAPTCHA, which is considerably harder to bypass, even for third-party services.
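The back-end check described in the first point might look roughly like this for reCAPTCHA. It is a sketch under stated assumptions: the helper names `post_form` and `captcha_passed` are invented for illustration, the secret key comes from your own reCAPTCHA account, and the only external detail relied on is Google’s documented `siteverify` endpoint, which takes `secret` and `response` fields and returns JSON with a `success` flag.

```python
import json
import urllib.parse
import urllib.request
from typing import Callable

# Google's documented reCAPTCHA verification endpoint.
VERIFY_URL = "https://www.google.com/recaptcha/api/siteverify"


def post_form(url: str, fields: dict) -> dict:
    """POST form-encoded fields and parse the JSON reply (hypothetical helper)."""
    data = urllib.parse.urlencode(fields).encode()
    with urllib.request.urlopen(url, data=data, timeout=5) as resp:
        return json.load(resp)


def captcha_passed(token: str, secret: str,
                   post: Callable[[str, dict], dict] = post_form) -> bool:
    """Return True only if the verification service confirms the token."""
    if not token:
        return False  # the form was submitted without a CAPTCHA answer
    try:
        reply = post(VERIFY_URL, {"secret": secret, "response": token})
    except (OSError, ValueError):
        return False  # fail closed if the service is unreachable or replies oddly
    return reply.get("success") is True
```

The request handler would call `captcha_passed` with the token submitted by the form and reject the request whenever it returns `False`. The `post` parameter exists only so the network call can be swapped out in tests.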

Set up honeypots

A honeypot is a great way to expose bots and the vulnerabilities they target. It works like a real part of your system, with data and applications that fool bots into thinking it’s a legitimate target. When bots want to reach the pages they’re after, they often follow navigation links blindly.

You can take advantage of this by creating web pages and links that human visitors never see and only crawlers will find. Bots and other malicious crawlers will follow the URL and visit the page. Once they’re in, you can easily track them and assess their behavior, gathering intelligence on how to block them and protect your website in the future.
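As a rough illustration of the idea, the sketch below flags any client that requests a trap URL. Everything named here is hypothetical: the `HoneypotTracker` class, the `/private-archive/` path, and the assumption that the path is linked invisibly on your pages and disallowed in robots.txt so that humans and well-behaved crawlers never request it.

```python
class HoneypotTracker:
    """Remember which clients requested a trap URL."""

    def __init__(self, trap_paths):
        self.trap_paths = set(trap_paths)
        self.flagged = {}  # client IP -> list of trap paths it requested

    def record(self, ip: str, path: str) -> bool:
        """Log one request; return True if this client has ever hit a trap."""
        if path in self.trap_paths:
            self.flagged.setdefault(ip, []).append(path)
        return ip in self.flagged


# Hypothetical trap path that no legitimate visitor should ever request.
tracker = HoneypotTracker({"/private-archive/"})
```

Calling `tracker.record(ip, path)` on every request lets you watch what a flagged client does next before deciding how to block it.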

Scrutinize every bot access point

Bot access points are places on your website that can give bots and other malicious crawlers easy access and let them cause havoc. Here are some common access points and how to keep bots out of them.

- APIs and other connections: APIs, plugins, and other third-party integrations can serve as points of entry because most of them are allowed to connect to and share data with your website. So, whether you’re hosting your first website or already have one, thoroughly check your third-party integrations and ensure each has solid security measures. Furthermore, get rid of obsolete integrations and update the rest to their latest versions.

- Unusual traffic spikes: an unexplained traffic spike might not be the win you think it is. Assess the situation properly whenever one occurs, because traffic you can’t explain can indicate bad bot activity. If that is the case, spring into action immediately to prevent the bots from performing malicious activities on your website.

- Unknown sources: block traffic from unknown or suspicious sources. Forbidding access from these sources is a great way to discourage attackers and protect your site from bots.
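One simple way to forbid access from particular sources is an IP blocklist. The sketch below uses Python’s standard `ipaddress` module; the function name `is_blocked` and the two networks listed are placeholders (they are address ranges reserved for documentation), so substitute the ranges you have actually decided to block.

```python
import ipaddress

# Placeholder networks (reserved documentation ranges); replace with your own.
BLOCKED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("2001:db8::/32"),
]


def is_blocked(client_ip: str) -> bool:
    """Return True if the address falls inside any blocked network."""
    try:
        addr = ipaddress.ip_address(client_ip)
    except ValueError:
        return True  # assumption: treat an unparseable address as blocked
    return any(addr in net for net in BLOCKED_NETWORKS)
```

In practice this kind of rule often lives in a firewall or web server configuration instead of application code; the logic is the same either way.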

Monitor login attempts closely

Another warning sign is an unusually high number of login attempts on your site. This may look like your website is finally gaining traction. However, you must check why you have that many login attempts and why they’re failing. If you act swiftly, you may prevent the bots from getting access to your website.

Additionally, define a baseline for failed login attempts, then monitor your website for anomalies or spikes. You should also set up alerts that notify you automatically when failed login attempts exceed that baseline.
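A baseline check of this kind can be sketched with a sliding time window. The class name `FailedLoginMonitor`, the baseline value, and the 60-second window are all assumptions for illustration; pick numbers that reflect your own site’s normal traffic.

```python
from collections import deque


class FailedLoginMonitor:
    """Count failed logins in a sliding window and compare against a baseline."""

    def __init__(self, baseline: int, window_seconds: float = 60.0):
        self.baseline = baseline      # failures per window considered normal
        self.window = window_seconds
        self._times = deque()         # timestamps of recent failures

    def record_failure(self, now: float) -> bool:
        """Record a failure at time `now`; return True if an alert is due."""
        self._times.append(now)
        # Drop failures that have aged out of the window.
        while self._times and self._times[0] <= now - self.window:
            self._times.popleft()
        return len(self._times) > self.baseline
```

Whenever `record_failure` returns `True`, the caller would send the notification, for example by email or to a chat channel.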

Keep up with relevant updates

Another easy but effective way to reduce the ways bots gain access to your website is to keep software up to date, on your side and on your visitors’ side. Keep your website and all third-party integrations updated with the latest releases. For instance, if you use WordPress, make sure that WordPress itself, your theme, and your plugins all have the latest updates.

Keeping up with relevant updates has its advantages. First, bots may use older versions to gain access. Second, the updates that software vendors release often include security fixes and, in some cases, bot-blocking options. Similarly, you can block outdated browser versions and require your audience to update their browsers before they can view your website.
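Requiring up-to-date browsers could be approximated by inspecting the User-Agent header, as in the sketch below. The function name `is_outdated_browser` and the minimum versions are hypothetical, only Chrome-style and Firefox-style version tokens are recognized, and because the header is trivially spoofed this should be treated as one weak signal among several.

```python
import re

# Hypothetical minimum major versions; choose thresholds that suit your audience.
MIN_MAJOR_VERSION = {"Chrome": 100, "Firefox": 100}

_UA_PATTERN = re.compile(r"\b(Chrome|Firefox)/(\d+)")


def is_outdated_browser(user_agent: str) -> bool:
    """Return True if the User-Agent reports a browser older than the minimum."""
    match = _UA_PATTERN.search(user_agent)
    if match is None:
        return False  # unrecognized client: leave the decision to other checks
    name, major = match.group(1), int(match.group(2))
    return major < MIN_MAJOR_VERSION[name]
```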

Don’t provide a reason for blocking any bot

Once you detect a bot and block its activity on your website, don’t reveal why in the response. Rather than returning a 429 error with an accurate message, return a different error such as 500, or answer with dummy data or other meaningless responses.

This is important because if the bot developers discover that their bot has been detected, they might look for other avenues to bypass detection in the future.
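A minimal sketch of such a reply is shown below. The name `decoy_response` and the two canned responses are illustrative assumptions; the point is only that nothing in the status code or body tells the bot it was detected.

```python
import random

# Replies that look like an ordinary failure or an empty result.
DECOY_RESPONSES = [
    (500, "Internal Server Error"),
    (200, '{"items": []}'),  # dummy data
]


def decoy_response(rng: random.Random) -> tuple[int, str]:
    """Pick a (status, body) reply for a detected bot without revealing why."""
    return rng.choice(DECOY_RESPONSES)
```

Varying the reply makes it a little harder for a bot developer to recognize a single telltale block response.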

Don’t stop monitoring your website

Just like Rome wasn’t built in a day, protecting your website from bots isn’t a one-and-done situation. You’ll need to constantly monitor your website for the slightest problem. Consider taking your website assessment a notch higher by including it in your calendar to check in monthly or as often as you choose.

Additionally, watch out for announcements of public data breaches. When a large breach occurs anywhere, expect bad bots to use the leaked data against your site more frequently. Once information like that gets out, take a close look at your own website and check carefully for signs of activity from bots and other malicious crawlers.

Take a bold stand against bots and safeguard your website

With bots getting more sophisticated than ever, finding the best ways to protect your website is no longer a luxury but an essential need for every website owner. Bot attacks can result in the loss of customers, a waste of limited time and resources, and even data breaches.

Investing in the right bot detection software is an excellent way to use AI technology to examine each bot’s behavior and discover unique ways to get rid of them. Similarly, measures such as using the CAPTCHA method, setting up honeypots, and keeping up with relevant updates are great ways of blocking the bots and protecting your website.
